Abstract

This paper proposes a spherical model of cognition, drawing parallels between hierarchical information processing in the human brain and artificial neural networks (ANNs). I integrate anatomical, computational, and thermodynamic perspectives to elucidate how radial and lateral energy propagation underlie concrete and abstract cognition, and I highlight fundamental distinctions in agency between biological and artificial systems.

- Introduction

- Hierarchical Correspondence

- Radial Functional Hierarchy

- Energy Dynamics and Cognitive Effort

- Lateral Propagation and Agency

- Reasoning as Radial Movement

- Gradient Descent and Backpropagation

- Implications for Intelligence and Consciousness

- Predictions and Future Directions

- Conclusion

- References

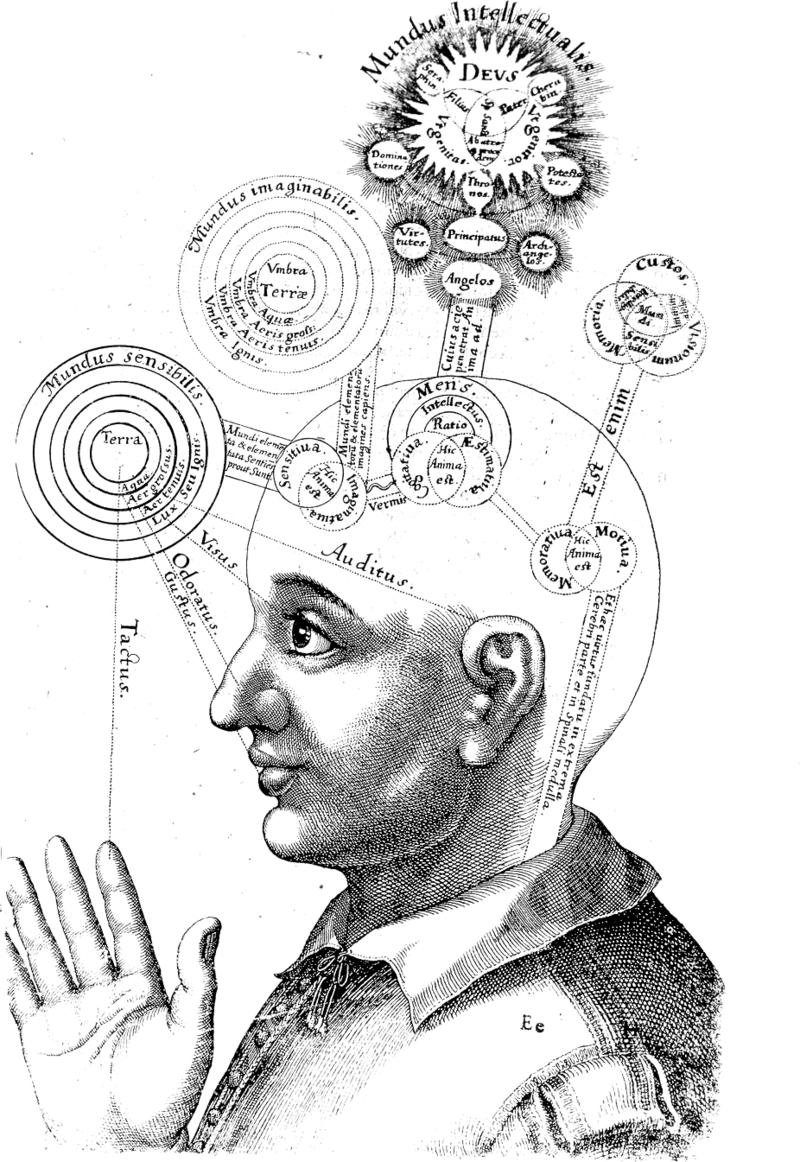

1. Introduction

Hierarchical processing—moving from simple, concrete, fragmented features to complex, abstract, consolidated representations—is a cornerstone of both neuroscience and deep learning. In ANNs, early layers detect low-level patterns and later layers compose these into high-level concepts. Likewise, the brain’s anatomy and physiology exhibit radial gradients from primary sensory cortices to central limbic and thalamic structures. I synthesize these observations into a unified spherical framework that emphasizes energy dynamics and agency.

2. Hierarchical Correspondence

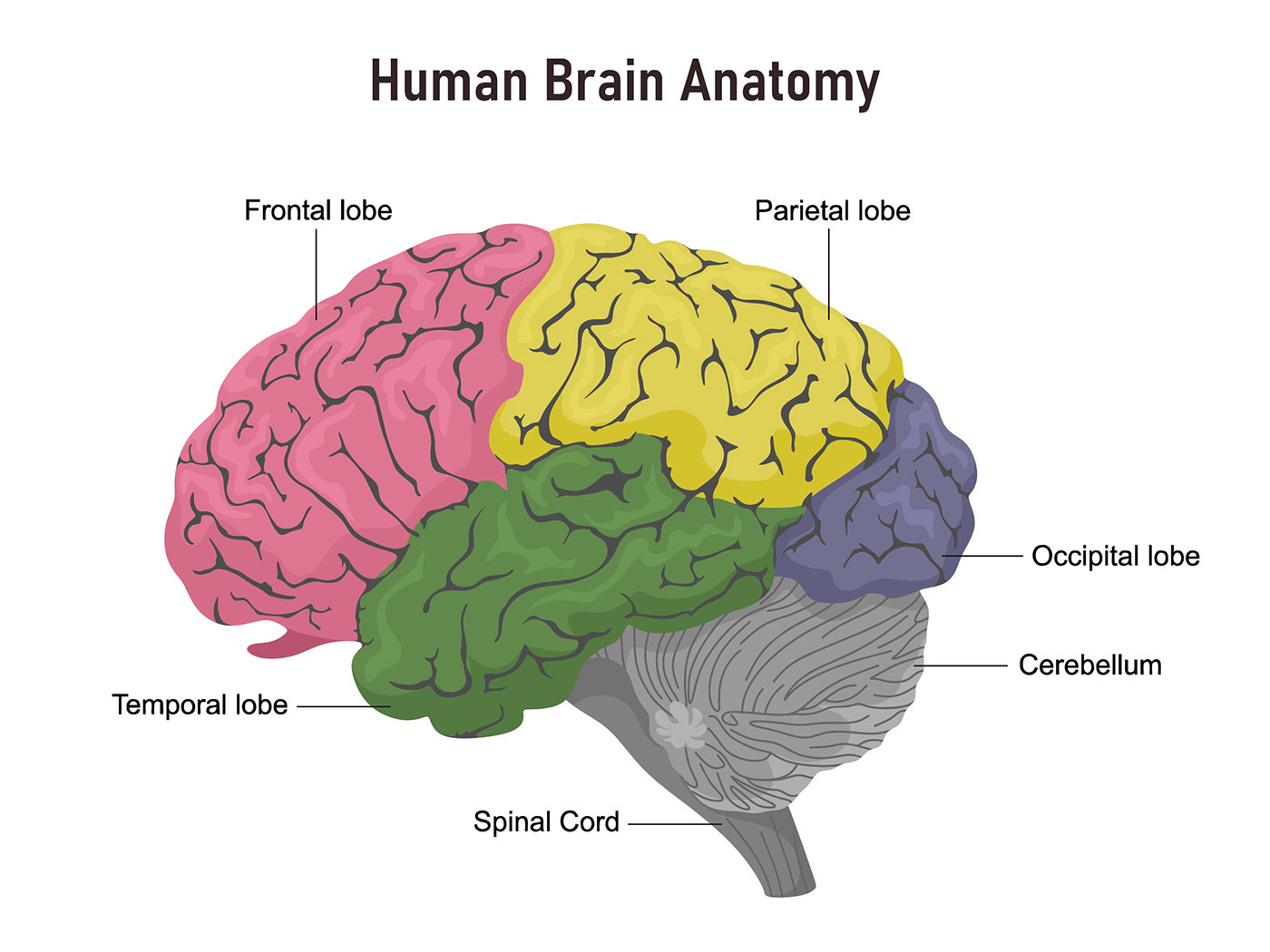

2.1. Cortical Hierarchy in the Human Brain

The primate visual pathway exemplifies the biological hierarchy: V1 → V2 → V4 → IT, transitioning from edge detection to complex object recognition. Laminar organization further refines this: superficial layers (2/3) mediate local processing, while deep layers (5/6) enable long-range integration ([1] David Badre, Mark D'Esposito, Is the rostro-caudal axis of the frontal lobe hierarchical?, 2009). Primary sensory areas (e.g., V1, S1, A1) possess greater cortical surface area and neuron count compared to higher-order association regions, reflecting the demands of high-dimensional concrete encoding ([2] Daniel J. Felleman, David C. Van Essen, Distributed Hierarchical Processing in the Primate Cerebral Cortex, 1991; [3] David H. Hubel, Torsten N. Wiesel, Receptive fields, binocular interaction and functional architecture in the cat's visual cortex, 1962).

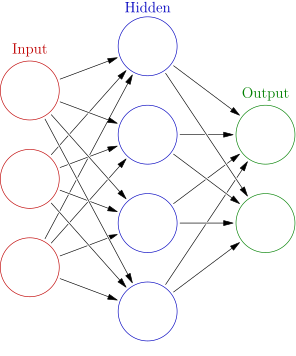

2.2. Artificial Neural Networks (ANNs)

In feedforward ANNs, information propagates through successive layers: early layers encode edges or textures; intermediate layers capture shapes; deep layers represent objects or categories. This architecture supports functionally segregated feature extraction and integration.
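The layer-by-layer propagation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a model of any particular network: the layer widths in `LAYER_SIZES`, the random weights, and the helper names (`dense`, `make_layer`, `forward`) are all hypothetical and chosen only to show successive layers compressing a wide input into a narrow output.

```python
import random

def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer; weights holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def make_layer(n_in, n_out, rng):
    """Random weights and zero biases (illustrative, untrained)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(inputs, layers):
    """Propagate inputs through successive layers, keeping every activation."""
    activations = [inputs]
    for weights, biases in layers:
        activations.append(relu(dense(activations[-1], weights, biases)))
    return activations

rng = random.Random(0)
# Hypothetical widths: raw input -> edges/textures -> shapes -> categories.
LAYER_SIZES = [16, 8, 4, 2]
layers = [make_layer(n_in, n_out, rng)
          for n_in, n_out in zip(LAYER_SIZES, LAYER_SIZES[1:])]
pixels = [rng.random() for _ in range(LAYER_SIZES[0])]
activations = forward(pixels, layers)
widths = [len(a) for a in activations]
print(widths)  # [16, 8, 4, 2]
```

Each entry of `activations` corresponds to one stage of the hierarchy, so the shrinking widths mirror the move from many low-level features to a few high-level ones.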

3. Radial Functional Hierarchy

Radial hierarchy denotes a center–periphery gradient in which:

- Peripheral cortices (primary sensory areas) handle detailed, high-dimensional information and have a large surface area and neuron count. They receive the signals sent from the eyes, nose, ears, tongue, skin, and nerves.

- Central structures (hippocampus, thalamus, cingulate cortex) integrate multimodal inputs for abstract reasoning and memory consolidation ([4] S. Murray Sherman, The thalamus is more than just a relay, 2007).

Neuron layers closer to the center have fewer intra-layer connections than neuron layers closer to the outer surface. The central association regions serve as hubs where multimodal input from all lobes converges and is amalgamated. These central structures facilitate higher-order cognition by operating on compressed, generalized representations and support complex cross-domain integration ([5] Larry R. Squire, Eric R. Kandel, Memory: From mind to molecules, 2009).

Information flows radially inward from peripheral sensory cortices through intermediate association areas to these central hub structures where cross-modal abstraction and memory consolidation occur—yielding a continuum from concrete sensation to abstract cognition. This creates a functional gradient from concrete sensory processing at the periphery of the processing hierarchy to abstract conceptual processing at the center of cognitive control.
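One way to make the center-periphery gradient concrete is to count units per spherical shell. The sketch below assumes, purely for illustration, that each shell holds a fixed number of units per unit of surface area, so that unit count scales with 4πr². The radii, the density value, and the function name `shell_unit_counts` are hypothetical and are not measurements of any brain.

```python
import math

def shell_unit_counts(radii, density):
    """Units per shell, assuming `density` units per unit of surface area."""
    return [round(density * 4.0 * math.pi * r ** 2) for r in radii]

RADII = [1.0, 2.0, 3.0, 4.0]  # hypothetical radii, ordered center -> periphery
DENSITY = 10.0                # hypothetical units per unit area

counts = shell_unit_counts(RADII, DENSITY)

# Walking inward, each step squeezes the representation into fewer units.
inward_path = list(reversed(counts))
compression = [outer / inner
               for outer, inner in zip(inward_path, inward_path[1:])]

for radius, count in zip(RADII, counts):
    print(f"r = {radius:.0f}: {count} units")
print("compression ratios moving inward:", [round(c, 2) for c in compression])
```

Under this assumption the outermost shell carries the most units and every inward step has a compression ratio above one, which is the geometric counterpart of the concrete-to-abstract continuum described above.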

4. Energy Dynamics and Cognitive Effort

Thermodynamically, energy can be distinguished as:

- Potential energy (syntropic concentration): accumulation of metabolic resources (ATP, glucose) during effortful, abstract thought.

- Kinetic energy (entropic dissipation): sensory/motor-driven release of energy during concrete processing.

The two flower videos serve as metaphoric visuals for understanding the energetics of cognitive effort.

Video credit: Neil Bromhall

Syntropy (budding, energetic potential or concentration)—etymologically from Greek syn- (“together”) and tropos (“turning, transformation”)—denotes a convergent, life-sustaining transformation of increasing order, commonly associated with the building together of structures into organization.

Syntropy is a measure of the order or energy aggregation in a system, while potential energy is the energy an object stores by virtue of its position or state. The two are related: generally, as a system's potential energy increases (through organization or configuration), its syntropy also tends to increase, reflecting a greater aggregation of energy. Potential energy can be thought of as the energy that has the potential to organize mind and matter together, constructing order and information.

Video credit: Neil Bromhall

In contrast, entropy (decaying, energetic kinesis or dissipation)—etymologically from Greek en- (“in”) and tropos (“turning, transformation”)—denotes a divergent, life-eroding transformation of decreasing order, commonly associated with the breaking apart of structures into disorganization.

Entropy is a measure of the disorder or energy dispersal in a system, while kinetic energy is the energy an object possesses due to its motion or temperature. The two are related: generally, as a system's kinetic energy increases (through disorder or randomization), its entropy also tends to increase, reflecting a greater dispersal of energy. Kinetic energy can be thought of as the energy whose kinesis disorganizes mind and matter, destroying order and information.

In the brain, syntropy manifests when energy is gathered and conserved, whereas entropy manifests when energy is squandered.
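The contrast between concentration and dispersal can be given a rough numerical form. The sketch below uses Shannon entropy over a discrete distribution as a stand-in for energy dispersal, and the gap to the maximum-entropy (uniform) distribution as a stand-in for negentropy. Treating this gap as a proxy for syntropy is an assumption made here for illustration. The two example distributions and the function names are hypothetical.

```python
import math

def shannon_entropy(probabilities):
    """Shannon entropy in bits; zero-probability states contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0.0)

def negentropy(probabilities):
    """Gap between the maximum possible entropy and the actual entropy, in bits."""
    return math.log2(len(probabilities)) - shannon_entropy(probabilities)

# Hypothetical shares of energy across four states.
concentrated = [0.97, 0.01, 0.01, 0.01]  # energy gathered mostly in one state
dispersed = [0.25, 0.25, 0.25, 0.25]     # energy spread evenly

for name, dist in [("concentrated", concentrated), ("dispersed", dispersed)]:
    print(f"{name}: entropy = {shannon_entropy(dist):.3f} bits, "
          f"negentropy = {negentropy(dist):.3f} bits")
```

The evenly spread distribution reaches the maximum entropy of 2 bits and zero negentropy, while the concentrated one sits well below that maximum.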

The blooming flowers symbolize the syntropic use of potential energy as an organizing force. The wilting flowers symbolize the entropic use of kinetic energy as a disorganizing force.

The juxtaposition of these two floral stages effectively captures the dual energetics of cognition:

- Syntropic energy accumulates and organizes, like the blossoming rose: beautiful, symmetrical, and metabolically expensive—paralleling the effort of focused thought.

- Entropic energy releases and disorganizes, like the wilted flower: collapsing form, spent vitality—analogous to the relief or release after expressive or reactive tasks.

This metaphor supports a thermodynamically grounded view of cognitive effort as energetic modulation—between intentional accumulation (syntropy) and spontaneous expenditure (entropy).

When we think abstractly (generally harder to do), we are concentrating—metabolic energy consumption increases in deeper brain regions involved in executive control and abstract reasoning, accumulating potential energy through syntropic processes that resist natural entropy.

When we think concretely (generally easier to do), we are relaxing—energy consumption shifts toward sensory and motor cortices, releasing this potential energy as kinetic energy in an entropic dissipation toward the outermost surface.

Potential energy accumulates against the natural tendency toward entropy. At the metabolic level, higher-order cognitive processes require more glucose and ATP than basic sensory processing ([6] Christopher W. Kuzawa, Harry T. Chugani, Lawrence I. Grossman, Leonard Lipovich, Otto Muzik, Patrick R. Hof, Derek E. Wildman, Chet C. Sherwood, William R. Leonard, Nicholas Lange, Metabolic costs and evolutionary implications of human brain development, 2014; [7] Pierre J. Magistretti, Igor Allaman, A cellular perspective on brain energy metabolism and functional imaging, 2015).

That is why concentration takes effort, whereas relaxation is easier and can even be spontaneous. This corresponds to ATP and glucose concentration gradients being built up during effortful cognition and released during relaxed processing ([8] Marcus E. Raichle, Debra A. Gusnard, Appraising the brain's energy budget, 2002).

Empirical evidence shows prefrontal and default-mode regions consume more glucose than sensory cortices during complex tasks, aligning with increased potential-energy build-up ([9] Daniel S. Margulies, Satrajit S. Ghosh, Alexandros Goulas, Marcel Falkiewicz, Julia M. Huntenburg, Georg Langs, Gleb Bezgin, Simon B. Eickhoff, F. Xavier Castellanos, Michael Petrides, Elizabeth Jefferies, Jonathan Smallwood, Situating the default-mode network along a principal gradient of macroscale cortical organization, 2016).

The persistent concentration of metabolic energy in centralized neural networks, creating coherent oscillatory patterns and integrated information processing, distinguishes living cognitive systems from passive information processors. This aligns with thermodynamic definitions of life as negentropy-generating systems that concentrate energy and maintain organization against entropic decay ([10] Erwin Schrödinger, What is life?, 1944; [11] Grégoire Nicolis, Ilya Prigogine, Self-Organization in Nonequilibrium Systems: From Dissipative Structures to Order through Fluctuations, 1977).

5. Lateral Propagation and Agency

Lateral propagation—information flow within a given layer—differs across domains. Biological systems self-regulate lateral signaling via metabolic and synaptic plasticity, enabling homeostatic agency. In contrast, transformer-based ANNs implement lateral flow through explicit attention mechanisms, requiring external computation rather than intrinsic energy regulation.

Living systems are negentropy engines: they concentrate energy and create order ([10] Erwin Schrödinger, What is life?, 1944; [11] Grégoire Nicolis, Ilya Prigogine, Self-Organization in Nonequilibrium Systems: From Dissipative Structures to Order through Fluctuations, 1977). Schrödinger's What is Life? (1944) characterized living systems as avoiding decay by "drinking orderliness" from their environment. Prigogine's dissipative structures show how life maintains organization through energy flow.

Neural oscillations create coherent, synchronized activity (concentrated energy). Consciousness correlates with integrated information across brain networks ([12] Giulio Tononi, Consciousness as Integrated Information: a Provisional Manifesto, 2008; [13] Randy L. Buckner, Daniel C. Carroll, Self-projection and the brain, 2007). Anesthesia disrupts this integration, reducing "inward concentration".

Furthermore, the control of energy propagation—radial (across spherical layers) and lateral (within the same spherical layer)—is a critical determinant of agency. Agency, in this context, is the ability to direct energy or information flow intentionally.

Lateral propagation operates differently in biological and artificial systems. In transformers, attention mechanisms enable sophisticated lateral information flow across positions within the same layer before the result is passed to subsequent layers.

However, this lateral propagation in machines is computationally controlled rather than energetically self-regulating as in biological systems.

This distinction—between computational control and biological energy regulation—highlights fundamental differences in how biological and artificial systems manage information flow, with implications for both neuroscience and AI development.
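The lateral flow that attention provides in a transformer can be sketched as scaled dot-product self-attention, in which every position in a layer is rewritten as a weighted mixture of all positions in that same layer. The version below is deliberately simplified: it uses identity projections in place of learned query, key, and value matrices, and the three-position input `tokens` is hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections (simplified)."""
    scale = math.sqrt(len(tokens[0]))
    mixed = []
    for query in tokens:
        # Attention weights of this position over every position in the layer.
        weights = softmax([dot(query, key) / scale for key in tokens])
        # The new representation is a weighted mixture of all positions.
        mixed.append([sum(w * value[d] for w, value in zip(weights, tokens))
                      for d in range(len(query))])
    return mixed

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical: 3 positions, 2 features
mixed = self_attention(tokens)
for before, after in zip(tokens, mixed):
    print(before, "->", [round(v, 3) for v in after])
```

Every step of this mixing is an explicit computation dictated by the algorithm, which is the sense in which lateral flow in machines is computationally controlled rather than energetically self-regulated.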

6. Reasoning as Radial Movement

- Induction (concrete-to-abstract): radial inward traversal, concentrating from diverse instances to infer general principles. Inductive reasoning is making a general abstract conclusion from specific concrete observed instances; i.e., you lead yourself to an inward layer.

- Deduction (abstract-to-concrete): radial outward flow, generating specific predictions from overarching laws. Deductive reasoning is making specific concrete conclusions from a general abstract observed law or principle; i.e., you lead yourself to an outward layer.

A single regularity that can generate multiple instances of an entity is like an abstract pattern generating multiple concrete instances of that pattern. We concentrate to induce the underlying abstract regularity and relax in different ways to deduce different variations of the overlying concrete instances. This agrees with the observation that we have to think in order to break the concrete down into the abstract, whereas the subsequent transition to concrete instances that we have perceived or conceived in the past occurs spontaneously upon intentional relaxation.

This spherical metaphor encapsulates the asymmetry of effort: inward concentration (induction) is metabolically demanding; outward relaxation (deduction) is comparatively effortless.
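The two directions of reasoning can be illustrated with a toy example in which induction is a least-squares line fit and deduction is evaluation of the fitted rule. This is only one possible reading of the inward and outward movements, offered as a sketch. The observations and the function names `induce` and `deduce` are hypothetical.

```python
def induce(observations):
    """Induction: compress concrete (x, y) instances into a rule y = slope*x + intercept."""
    n = len(observations)
    mean_x = sum(x for x, _ in observations) / n
    mean_y = sum(y for _, y in observations) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in observations)
    variance = sum((x - mean_x) ** 2 for x, _ in observations)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

def deduce(rule, xs):
    """Deduction: generate concrete instances from the general rule."""
    slope, intercept = rule
    return [slope * x + intercept for x in xs]

# Hypothetical concrete instances, all generated by y = 2x + 1.
observations = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

rule = induce(observations)              # inward: many instances -> one regularity
predictions = deduce(rule, [4.0, 10.0])  # outward: one regularity -> new instances
print(rule, predictions)
```

The asymmetry of effort has a loose counterpart here: `induce` must aggregate over every observation, while `deduce` is a single evaluation per new instance.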

Hallucinations, on the other hand, are outward relaxations to fanciful concretions: instances that we have not perceived or conceived in the past, or that we have perceived or conceived in the past only through hallucination. The transition is therefore not spontaneous but very effortful.

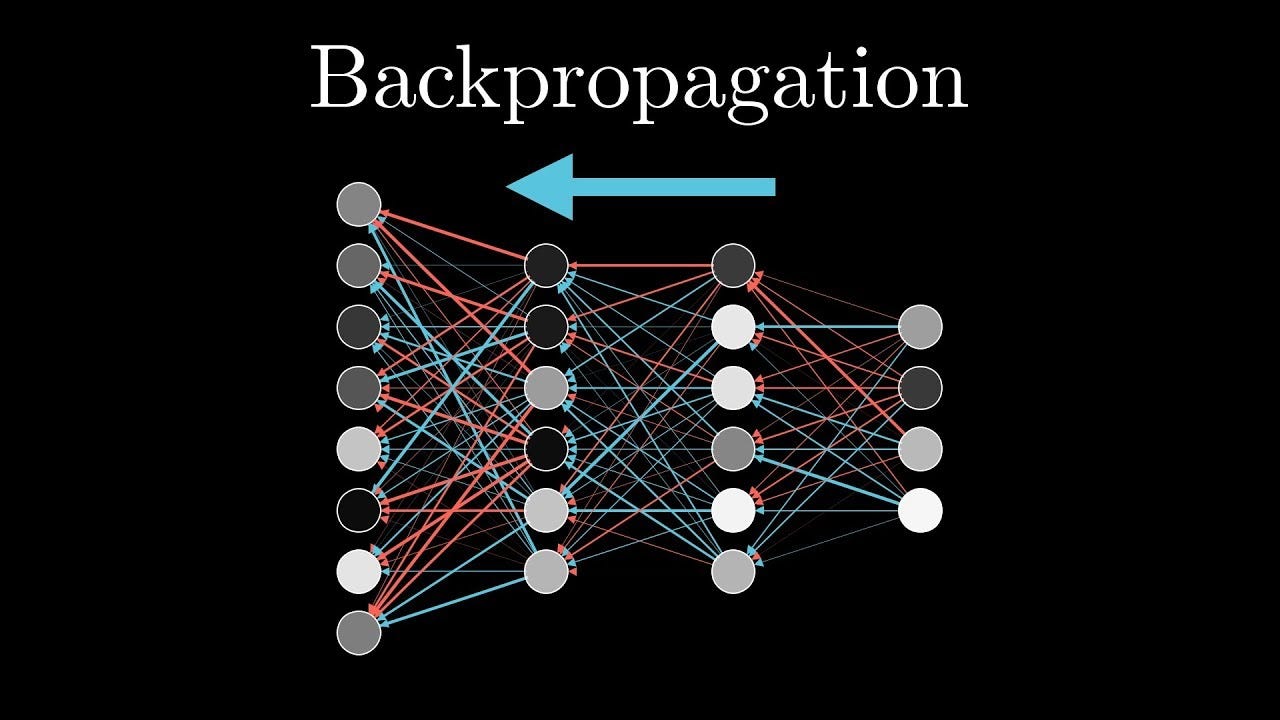

7. Gradient Descent and Backpropagation

In ANNs, forward propagation maps inputs to outputs; backpropagation adjusts weights via error gradients. Analogously, the brain updates synaptic configurations upon receiving feedback, effectuating a form of biological gradient descent centered in integrative hubs.

We make a prediction when we write an answer down, or when we anticipate the next word or phrase while reading. When we then see the correct answer, word, or phrase, we register the loss, or error gradient, and correct our neural configuration somewhat; i.e., we descend the gradient so that our next prediction aligns more closely with the correct value. In AI, this procedure is gradient descent. The biological analog parallels how regularities emerge in central hubs and propagate outward as refined predictions.

How does this happen? The underlying regularity rests near the center of the brain and can generate multiple data through outward propagation, which is loosely analogous to backpropagation in ANNs. In this picture, backpropagation is concretion in the outward direction, from the incorrect answers or outputs back toward the input, relaxing in different ways so that the brain arrives at the correct abstraction or output on the next concentration, or forward propagation. That is, the loss function is reduced by concentrating on the correct generalizable abstraction or regularity after repeated bidirectional propagations.
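The predict, compare, and correct cycle can be sketched as gradient descent on a single weight with a squared-error loss. This is a one-parameter illustration rather than full backpropagation through a multi-layer network. The training data, learning rate, and epoch count are hypothetical values chosen so that the example converges.

```python
def predict(weight, x):
    """Forward pass: the current guess for input x."""
    return weight * x

def train(samples, weight=0.0, learning_rate=0.05, epochs=50):
    """Repeat predict -> compare -> correct, recording the mean loss per epoch."""
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, target in samples:
            error = predict(weight, x) - target  # signed prediction error
            total += error ** 2                  # squared-error loss
            gradient = 2.0 * error * x           # d(error**2) / d(weight)
            weight -= learning_rate * gradient   # step down the gradient
        losses.append(total / len(samples))
    return weight, losses

# Hypothetical data generated by the regularity target = 3 * x.
samples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

weight, losses = train(samples)
print(f"learned weight: {weight:.4f}")
print(f"loss, first epoch: {losses[0]:.4f}; last epoch: {losses[-1]:.2e}")
```

Each inner-loop iteration corresponds to one guess followed by one correction, and the falling loss across epochs reflects predictions that align progressively more closely with the correct values.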

8. Implications for Intelligence and Consciousness

True intelligence, and perhaps consciousness, may hinge on active energy control—the capacity to sustain negentropic states via radially and laterally regulated energy flows. Biological systems inherently generate order against entropy, whereas ANNs rely on external optimization algorithms.

The proposed spherical model suggests that true intelligence requires not just the ability to process information hierarchically, but the capacity to actively direct energy flow both radially and laterally ([14] John Duncan, Adrian M. Owen, Common regions of the human frontal lobe recruited by diverse cognitive demands, 2000). While current artificial systems excel at passive propagation through their layered architectures, they lack the biological stability of active energy control that characterizes living systems.

This energy-centric view of cognition offers a framework for understanding why concentration feels effortful while relaxation comes naturally, why abstract thinking requires more energy than concrete processing, and perhaps most importantly, why the question of machine consciousness remains so elusive. The persistent inward concentration of energy—life's signature—may be the key distinction between systems that merely process information and those that truly think.

While transformer architectures achieve lateral information flow through attention mechanisms, they lack the thermodynamic self-organization that characterizes biological energy concentration ([15] Karl Friston, The free-energy principle: a unified brain theory?, 2010; [16] Inês Hipólito, Maxwell J. D. Ramstead, Axel Constant, Karl Friston, Cognition coming about: self-organisation and free-energy, 2020). Current AI systems require external computational control rather than intrinsically generating negentropy.

9. Predictions and Future Directions

- Neuroimaging: fMRI should reveal elevated metabolic activity in hippocampal–thalamic circuits during abstract tasks versus sensory cortices in concrete tasks.

- Neuromodulation: Disrupting central hubs (e.g., via TMS) will selectively impair abstract reasoning.

- AI Design: Incorporating thermodynamic constraints and self-organizing lateral dynamics may enhance machine agency.

10. Conclusion

The spherical model unites anatomical, computational, and energetic perspectives into a novel framework for cognition. By foregrounding radial and lateral energy propagation, it delineates why abstract thought demands effort, highlights agency as energy self-regulation, and offers pathways for advancing both neuroscience and artificial intelligence.

This paper presents a thermodynamically grounded theory of cognition with an anatomically accurate center-periphery model (with a limbic/thalamic hub), testable predictions about metabolic patterns, a theoretical distinction between biological negentropy and artificial computation, and citations spanning neuroscience, thermodynamics, and consciousness studies.

11. References

- David Badre, Mark D'Esposito, Is the rostro-caudal axis of the frontal lobe hierarchical?, Nature Reviews Neuroscience, Volume 10, Issue 9, September 2009, Pages 659–669, https://doi.org/10.1038/nrn2667

- Daniel J. Felleman, David C. Van Essen, Distributed Hierarchical Processing in the Primate Cerebral Cortex, Cerebral Cortex, Volume 1, Issue 1, January 1991, Pages 1–47, https://doi.org/10.1093/cercor/1.1.1-a

- David H. Hubel, Torsten N. Wiesel, Receptive fields, binocular interaction and functional architecture in the cat's visual cortex, The Journal of Physiology, Volume 160, Issue 1, January 1962, Pages 106–154, https://doi.org/10.1113/jphysiol.1962.sp006837

- S. Murray Sherman, The thalamus is more than just a relay, Current Opinion in Neurobiology, Volume 17, Issue 4, August 2007, Pages 417–422, https://doi.org/10.1016/j.conb.2007.07.003

- Larry R. Squire, Eric R. Kandel, Memory: From mind to molecules, Scientific American Library, New York, 2009

- Christopher W. Kuzawa, Harry T. Chugani, Lawrence I. Grossman, Leonard Lipovich, Otto Muzik, Patrick R. Hof, Derek E. Wildman, Chet C. Sherwood, William R. Leonard, Nicholas Lange, Metabolic costs and evolutionary implications of human brain development, Proceedings of the National Academy of Sciences of the United States of America, Volume 111, Issue 36, September 2014, Pages 13010–13015, https://doi.org/10.1073/pnas.1323099111

- Pierre J. Magistretti, Igor Allaman, A cellular perspective on brain energy metabolism and functional imaging, Neuron, Volume 86, Issue 4, May 2015, Pages 883–901, https://doi.org/10.1016/j.neuron.2015.03.035

- Marcus E. Raichle, Debra A. Gusnard, Appraising the brain's energy budget, Proceedings of the National Academy of Sciences of the United States of America, Volume 99, Issue 16, August 2002, Pages 10237–10239, https://doi.org/10.1073/pnas.172399499

- Daniel S. Margulies, Satrajit S. Ghosh, Alexandros Goulas, Marcel Falkiewicz, Julia M. Huntenburg, Georg Langs, Gleb Bezgin, Simon B. Eickhoff, F. Xavier Castellanos, Michael Petrides, Elizabeth Jefferies, Jonathan Smallwood, Situating the default-mode network along a principal gradient of macroscale cortical organization, Proceedings of the National Academy of Sciences of the United States of America, Volume 113, Issue 44, November 2016, Pages 12574–12579, https://doi.org/10.1073/pnas.1608282113

- Erwin Schrödinger, What is life?, Cambridge University Press, Cambridge, 1944, https://doi.org/10.1017/CBO9781139644129

- Grégoire Nicolis, Ilya Prigogine, Self-Organization in Nonequilibrium Systems: From Dissipative Structures to Order through Fluctuations, Wiley, New York, 1977

- Giulio Tononi, Consciousness as Integrated Information: a Provisional Manifesto, The Biological Bulletin, Volume 215, Issue 3, December 2008, Pages 216–242, https://doi.org/10.2307/25470707

- Randy L. Buckner, Daniel C. Carroll, Self-projection and the brain, Trends in Cognitive Sciences, Volume 11, Issue 2, February 2007, Pages 49–57, https://doi.org/10.1016/j.tics.2006.11.004

- John Duncan, Adrian M. Owen, Common regions of the human frontal lobe recruited by diverse cognitive demands, Trends in Neurosciences, Volume 23, Issue 10, October 2000, Pages 475–483, https://doi.org/10.1016/S0166-2236(00)01633-7

- Karl Friston, The free-energy principle: a unified brain theory?, Nature Reviews Neuroscience, Volume 11, Issue 2, February 2010, Pages 127–138, https://doi.org/10.1038/nrn2787

- Inês Hipólito, Maxwell J. D. Ramstead, Axel Constant, Karl Friston, Cognition coming about: self-organisation and free-energy, arXiv preprint, July 2020, https://doi.org/10.48550/arXiv.2007.15205